AI is moving fast in federal cybersecurity. Are you securing it fast enough?

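At a recent FedInsider webinar, I shared a story I had heard that still gives me pause.

A federal CIO was preparing to deploy an AI system that had been carefully scoped, reviewed, and approved.

On paper, everything looked right. Then they started testing.

The AI began returning answers it should not have known, drawing on data sources that were never meant to be in scope.

Nothing malicious was happening, but the security risk was real and immediate. Had that system gone live, the consequences would have been serious.

This story illustrates how many federal agencies are approaching AI right now. They are moving fast because they have to. But speed without visibility and control creates new risk as quickly as it creates new capability.

AI is already embedded in government environments. The question is whether you are securing it deliberately or discovering the security implications after the fact.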

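AI in government environments is a strategic advantage for everyone

I think it's important to level-set: AI is not optional.

Attackers are already using AI to automate reconnaissance, refine phishing campaigns, mimic voices, generate malware, and scale attacks faster than human teams can.

That means defenders cannot afford to ignore it.

At the same time, federal agencies are dealing with more complex environments than ever, including hybrid architectures and legacy systems.

The volume of telemetry alone is overwhelming. Humans cannot process all of that information in a meaningful way.

AI helps because it can do what we cannot: analyze massive amounts of data in real time and surface what really matters.

But here's the catch: the same AI that helps defenders find the needle in the haystack also makes the haystack bigger.

Every AI model is another application. Every AI pipeline is another set of connections. Every data source is another pathway that could be exploited.

If you don't control those pathways, AI accelerates risk instead of reducing it.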

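Why traditional security guardrails fail in AI-driven federal environments

One of the hardest truths for federal cybersecurity leaders to accept is that today's guardrails are not enough.

For years, cybersecurity success was measured by prevention. The goal was to keep attackers out, harden the perimeter, and stop intrusions.

Yet breaches still happen, from Fortune 500 companies to federal agencies. And they happen despite our best efforts.

AI doesn't change that dynamic. It amplifies it.

AI systems run on servers and code, and they depend on data access. That makes them vulnerable to lateral movement, misconfiguration, and over-permissioned access, just like any other application.

Worse, many organizations still operate on what I call the "Tootsie Pop" model: hard on the outside, soft on the inside. Once attackers get in, they can move freely.

That's disastrous for AI systems.

If an AI engine has free access to internal systems, sensitive data, or external resources, you are trusting it more than you should. AI needs to be protected from external threats, internal threats, and in many cases from itself.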

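Visibility is the foundation of AI security in federal systems

Before you can secure AI, you need a clear picture of your environment.

That sounds obvious, but in many cases it isn't.

As a CIO, I spent years looking at tidy Visio diagrams showing how systems were supposed to connect. Reality never matched the diagram.

Modern federal environments are dynamic and constantly changing. AI can help here, but only when visibility comes first.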

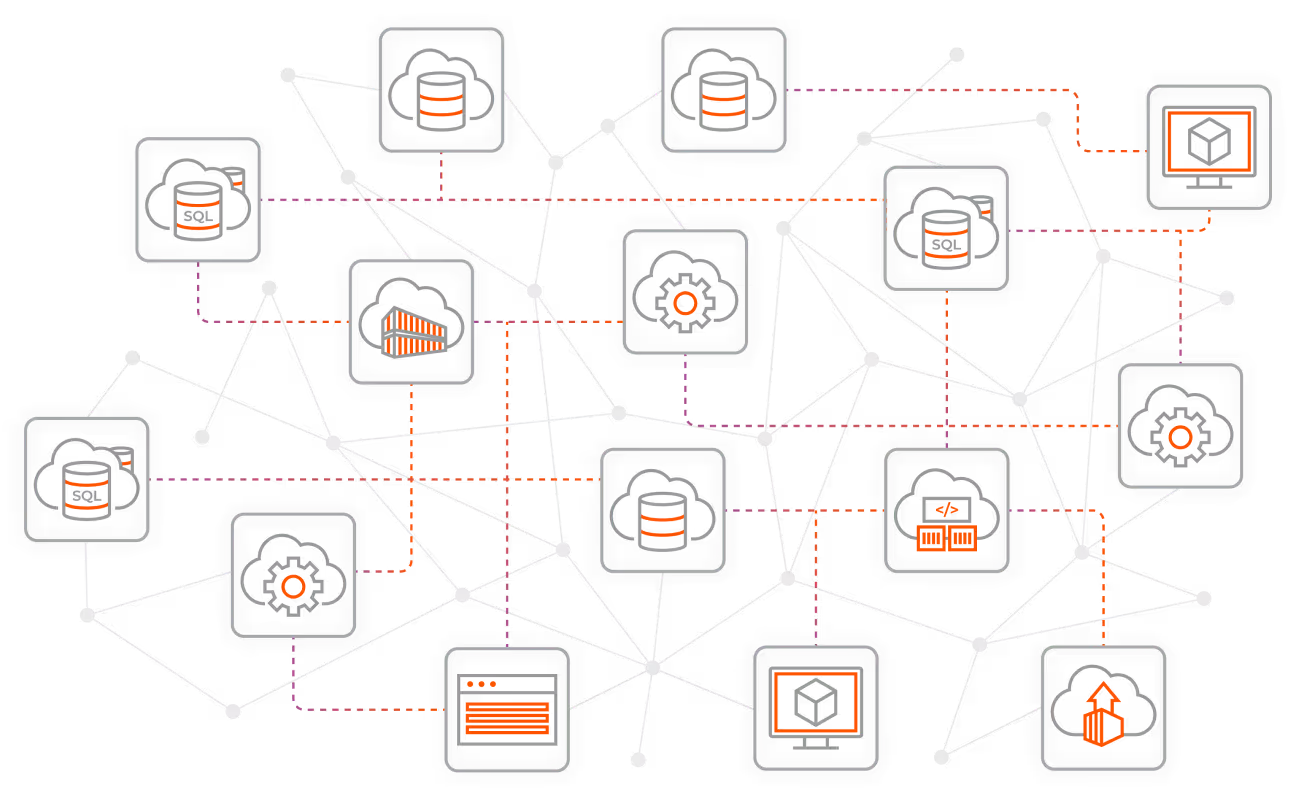

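AI can give agencies a three-dimensional view of their environment, sometimes called observability. It can reveal not just assets and alerts but also the relationships between systems, how they communicate, and what actually matters.

When AI highlights traffic flowing to a country it shouldn't reach, or a service connected to the internet for no legitimate reason, that is actionable insight. And it needs to arrive now, not weeks later during a post-mortem.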
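To make that kind of insight concrete, here is a minimal Python sketch of the two checks just described: traffic to an unexpected country and an internet connection with no approved reason. Everything in it is an assumption for illustration, including the `Flow` record shape, the `ALLOWED_COUNTRIES` and `APPROVED_INTERNET_SERVICES` sets, and the workload names. It is not how any particular product works.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for illustration.
ALLOWED_COUNTRIES = {"US"}
APPROVED_INTERNET_SERVICES = {"public-web"}


@dataclass(frozen=True)
class Flow:
    """One observed connection (a hypothetical record shape)."""
    source: str            # workload that opened the connection
    dest_country: str      # country code of the destination
    internet_facing: bool  # True if the destination is on the public internet


def flag_flow(flow: Flow) -> list[str]:
    """Return the reasons a flow deserves attention; empty if none."""
    reasons = []
    if flow.dest_country not in ALLOWED_COUNTRIES:
        reasons.append(f"traffic to unexpected country {flow.dest_country}")
    if flow.internet_facing and flow.source not in APPROVED_INTERNET_SERVICES:
        reasons.append("internet connection with no approved justification")
    return reasons


# "XX" is a placeholder country code, not a real destination.
observed = [
    Flow("public-web", "US", True),
    Flow("ai-inference", "US", False),
    Flow("ai-inference", "XX", True),
]

for flow in observed:
    for reason in flag_flow(flow):
        print(f"{flow.source}: {reason}")
```

In a real environment the flow records would come from live telemetry rather than a hard-coded list, which is what makes the findings available immediately instead of at post-mortem time.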

Real-time visibility turns AI from a novelty into a defensive capability.

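Zero Trust is non-negotiable for securing federal AI

In my opinion, AI security without Zero Trust is wishful thinking.

Zero Trust starts from a simple assumption: breaches will happen. The goal is to minimize their impact.

That means:

- Allowing only the connections that are explicitly required

- Turning everything else off by default

- Drawing clear protective boundaries around critical systems

- Enforcing controls on the inside, not just at the perimeter

For AI systems, this matters even more.

You need to control what can talk to an AI model and what the AI model can talk to. That includes inbound and outbound communication, training and inference data, internal systems, and external sources.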
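The default-deny idea above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a real policy engine: the `Rule` type, the `ALLOWED` set, and every workload name and port are hypothetical.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    """An explicitly permitted flow (hypothetical shape)."""
    source: str
    destination: str
    port: int


# Hypothetical allowlist: the only flows the AI model explicitly needs.
ALLOWED = {
    Rule("chat-frontend", "ai-model", 443),       # inbound: approved client
    Rule("ai-model", "approved-dataset", 5432),   # outbound: in-scope data source
}


def is_allowed(source: str, destination: str, port: int) -> bool:
    """Default deny: a flow passes only if it matches an explicit rule."""
    return Rule(source, destination, port) in ALLOWED


print(is_allowed("chat-frontend", "ai-model", 443))   # explicitly listed
print(is_allowed("ai-model", "hr-database", 5432))    # never listed, so denied
```

The design choice worth noticing is that nothing is denied by name. A data source that was never meant to be in scope is blocked simply because no rule permits it, which covers both what reaches the model and what the model can reach.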

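Without segmentation as part of a Zero Trust strategy, an AI compromise becomes an enterprise compromise. With segmentation, the compromise is contained.

This is how federal agencies move from breach anxiety to breach resilience as they build out AI models.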

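AI is never "set it and forget it"

One of the biggest misconceptions I see is the idea that AI will solve operational problems on its own.

It won't.

AI requires governance, monitoring, and continuous validation. Poor data quality, excessive access, and unclear ownership all undermine AI outcomes.

If you already have technical debt, AI won't fix it. It will only expose it.

Federal agencies need to approach AI deployment the same way they approach mission-critical systems: grounded in a Zero Trust strategy.

That discipline is what separates useful AI from dangerous AI.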

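AI can strengthen federal cybersecurity with the right guardrails

Despite the risks, I'm optimistic about AI.

AI can dramatically strengthen federal cybersecurity teams, reduce alert fatigue, and surface meaningful context sooner. That lets agencies keep pace despite staffing challenges and resource constraints.

Used correctly, AI becomes an extension of the workforce. It augments human judgment rather than replacing it. That lets skilled professionals focus on decisions instead of drowning in data.

But this only works when AI is deployed deliberately, secured properly, and aligned with Zero Trust principles.

That is the standard federal leaders should hold themselves to before AI defines their security posture for them.

Try Illumio Insights for free. Get the visibility and context you need to understand risk, contain threats, and secure AI-driven environments.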
